Video Vitals: Stabilization

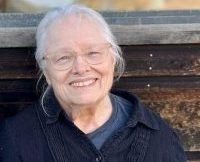

Pictured here is a remote-controlled camera stabilizer mounted on a rail.

Ever since personal cameras became widespread, programs and devices have been developed to make handheld footage easier to watch. Most earlier solutions relied on bulky mechanical arms. Newer ones build the technology into the camera lens itself, using a microcomputer that moves a lens element precisely to counteract shaking and uneven transitions. The goal is to turn shaky human movement into smooth, steady motion. This technology is known as video stabilization.

The first widely adopted stabilization system was the Steadicam, introduced in 1975. It was invented by Garrett Brown and brought to market by Cinema Products Corporation. As the name suggests, the Steadicam let an operator make smooth, steady camera moves. The camera rides on a balanced, gimbaled mount attached to a spring-loaded arm worn on a vest, and the arm absorbs the operator's footsteps and jolts. The Steadicam was used in many films and other media. However, it was built for cinema cameras and depended on large, complicated, and frankly obnoxiously sized rigs.

After personal cameras reached the consumer market, it took a while for stabilization to catch up. One of the first stabilizers designed for handheld cameras, the Glidecam, was introduced in 1991. The company is still going strong today, producing handheld and body-mounted camera stabilizers for professionals.

When YouTube went online and video sharing grew in popularity, companies began advertising software that could stabilize existing footage. This was harder than building the technology into the camera. Now a computer had to watch the video and work out what was happening: where the camera was, where it was going, and where it stopped. It then had to turn all of that into a smooth movement that people could comfortably watch. The first attempts were certainly messy (the failed prototypes are probably locked in a safe somewhere), but the first functional versions used one-point tracking. The user would load the video. The computer, sometimes with the user's help, would then find the brightest, sharpest, or most distinctive point in the frame, something easy to track and visible throughout the entire video. The software locked onto that point and held it in place for the whole video. The frame could move around it, but the point itself would not move. This worked reasonably well, but it hit a wall when the camera rotated or moved too much. If the tracking point was obscured or left the frame, the technique failed. How do we fix this? Well, add another point.
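At its core, one-point tracking is simple arithmetic. The software measures how far the tracked point has drifted from where it started, then shifts the frame back by that amount. The Python sketch below assumes that a hypothetical tracker has already reported the point's (x, y) pixel position in every frame, with `None` wherever the point was obscured or out of frame. The function name `one_point_offsets` is illustrative and is not taken from any real product.

```python
from typing import List, Optional, Tuple

Point = Tuple[float, float]


def one_point_offsets(track: List[Optional[Point]]) -> List[Optional[Point]]:
    """Per-frame (dx, dy) shift that keeps one tracked point where it started.

    track[i] is the point's (x, y) position in frame i, or None if the
    (hypothetical) tracker lost it because it was obscured or out of frame.
    """
    if not track or track[0] is None:
        raise ValueError("the tracked point must be visible in the first frame")
    x0, y0 = track[0]
    offsets: List[Optional[Point]] = []
    for position in track:
        if position is None:
            # Nothing to lock onto: this is where one-point tracking breaks down.
            offsets.append(None)
        else:
            x, y = position
            # Shifting the frame by (x0 - x, y0 - y) puts the point back at (x0, y0).
            offsets.append((x0 - x, y0 - y))
    return offsets
```

For a track of `[(100, 50), (103, 48), (97, 52), None]`, the offsets are `[(0, 0), (-3, 2), (3, -2), None]`. The `None` in the last frame is exactly the failure case, because there is no point left to lock onto.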

The next step was to give the computer more to focus on. Two-point tracking had the computer follow two points and draw a line between them, keeping that line and both points rock solid throughout the entire video. Because two points fix both an angle and a distance, this version could also correct rotation and changes in scale. Once again, though, the system struggled with pitch (tilting up and down) and yaw (turning side to side). The computer couldn't work out how to stretch and squash the frame to compensate without making the image incomprehensible. The natural next step was software that could do that stretching and squashing itself.
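Two tracked points are enough to pin down a similarity transform, which combines a shift, a rotation, and a uniform scale. One compact way to sketch this writes points as complex numbers `x + yj`. You can then solve for a complex factor `m`, which holds the rotation and scale, and an offset `t`, which carry the current frame's two points back onto their reference positions. The function names are hypothetical. This illustrates the geometry and is not any particular program's code.

```python
import cmath
import math


def two_point_transform(ref_a, ref_b, cur_a, cur_b):
    """Similarity transform z -> m*z + t that maps the current frame's two
    tracked points (cur_a, cur_b) back onto their reference positions.

    Points are complex numbers x + yj. The complex factor m carries both the
    rotation and the uniform scale, and t carries the shift.
    """
    if cur_a == cur_b:
        raise ValueError("the two tracked points must be distinct")
    m = (ref_b - ref_a) / (cur_b - cur_a)
    t = ref_a - m * cur_a
    return m, t


def apply_transform(m, t, z):
    """Move a point from the current frame into the stabilized (reference) frame."""
    return m * z + t


def describe(m):
    """Return (scale, rotation in degrees) encoded in the factor m."""
    return abs(m), math.degrees(cmath.phase(m))
```

As an example, suppose the reference points are `0` and `10` and the current frame has them at `5+5j` and `5+15j`, so the line has turned a quarter turn. The sketch then recovers a scale of 1 and a rotation of about -90 degrees. A similarity transform has only four degrees of freedom (horizontal shift, vertical shift, rotation, and scale). It therefore cannot express the perspective change that pitch and yaw introduce, which matches the limitation described above.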

And so Adobe introduced a new form of stabilization called Subspace Warp, offered as a method in its Warp Stabilizer effect. This technology could squash and stretch sections of the frame to stabilize the video, and the effect was barely noticeable unless you were looking for it. Once observant viewers pointed out these small artifacts, though, the program's influence on the frame became much more obvious. Some finer details could end up blurred or distorted, but the results were generally acceptable. Still, programmers knew they could do better. The next generation of video stabilization came from YouTube.

YouTube's stabilization was widely used on handheld footage uploaded to the site until 2018, when built-in stabilization resurfaced and became more accessible. Even now, some people argue that YouTube's stabilization was more effective than the stabilization built into modern cameras. The development of the feature was described to the public in a post on the Google AI Blog. According to that post, the system first estimated the path the original camera took through the video. It then computed a new, smooth path for a virtual camera, built from constant, linear, and parabolic segments. The virtual camera moved smoothly through each movement and then held still until the next one began. Finally, the system cropped and transformed each original frame to match the virtual camera's view. This was the most advanced technology for a while, until the microcomputer-driven lenses mentioned earlier came along.
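The constant, linear, and parabolic segments have a mathematical motivation. The published research behind YouTube's stabilizer (Grundmann, Kwatra, and Essa, "Auto-Directed Video Stabilization with Robust L1 Optimal Camera Paths") frames the new path as an L1 optimization. Penalizing the absolute first, second, and third differences of the path favors stretches with zero velocity, zero acceleration, or zero jerk, which are exactly constant, linear, and parabolic motion. The sketch below is a simplified one-dimensional version that assumes only horizontal shake and uses SciPy's `linprog`. It also relies on two assumptions: a hypothetical `max_offset` stands in for how far the crop window may move, and the default weights are illustrative.

```python
import numpy as np
from scipy.optimize import linprog


def l1_smooth_path(path, max_offset, weights=(10.0, 1.0, 100.0)):
    """Smooth a 1-D camera path with an L1 objective (illustrative sketch).

    Minimizes w1*sum|D1 p| + w2*sum|D2 p| + w3*sum|D3 p|, where Dk is the
    k-th finite difference, subject to |p[t] - path[t]| <= max_offset.
    The weights and max_offset are assumptions, not values from YouTube.
    """
    c = np.asarray(path, dtype=float)
    n = len(c)
    eye = np.eye(n)
    # Difference operators: rows of D1, D2, D3 act on the path vector p.
    diffs = [np.diff(eye, n=k, axis=0) for k in (1, 2, 3)]
    sizes = [d.shape[0] for d in diffs]
    num_vars = n + sum(sizes)  # p itself plus one slack variable per difference

    # Cost: nothing on p, weight w_k on every slack e_k (which ends up equal to |D_k p|).
    cost = np.concatenate(
        [np.zeros(n)] + [np.full(m, w) for m, w in zip(sizes, weights)]
    )

    rows = []
    start = n
    for d, m in zip(diffs, sizes):
        diff_block = np.zeros((m, num_vars))
        diff_block[:, :n] = d
        slack_block = np.zeros((m, num_vars))
        slack_block[:, start:start + m] = np.eye(m)
        rows.append(diff_block - slack_block)   #  D_k p - e_k <= 0
        rows.append(-diff_block - slack_block)  # -D_k p - e_k <= 0
        start += m
    a_ub = np.vstack(rows)
    b_ub = np.zeros(a_ub.shape[0])

    # Keep the smoothed path within max_offset of the original (crop-window stand-in).
    bounds = [(ci - max_offset, ci + max_offset) for ci in c]
    bounds += [(0, None)] * sum(sizes)

    result = linprog(cost, A_ub=a_ub, b_ub=b_ub, bounds=bounds, method="highs")
    if not result.success:
        raise RuntimeError(result.message)
    return result.x[:n]
```

Consider a path that jitters between -1 and 3 with `max_offset=3.0`. Every point can stay within bounds while the camera holds still, so the optimizer returns a constant, perfectly steady path. Larger motions force it to use linear or parabolic pieces instead.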

Nowadays, video stabilization often happens within the camera itself. A small microcomputer connected to the lens controls a floating lens element. Guided by motion sensors that detect shake, it shifts that element up, down, and side to side to counteract the unpredictable movement of handheld footage, producing smooth footage while recording. This blends electronic control with physical stabilization, because precise electronics physically move part of the lens. It is the best we have for now, but it is still just one more generation of video stabilization. Wait a few more years, and another brilliant new technology could be on its way.
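The in-camera approach can be sketched as a small control loop with three steps:

1. Integrate the motion sensor's angular rate to find how far the camera has tilted.
2. Estimate how far that tilt moves the image on the sensor, roughly focal length × tan(angle) for distant subjects.
3. Move the lens element the opposite way.

This Python sketch assumes a gyroscope that reports one axis in radians per second. It also assumes a hypothetical lens in which moving the floating element by a given distance moves the image by the same distance. The function name is illustrative, and real lenses and firmware differ.

```python
import math


def ois_lens_shifts(gyro_rates, dt, focal_length_mm):
    """Lens-shift commands (mm) that cancel hand shake, one per gyro sample.

    gyro_rates: angular velocity samples (rad/s) about one axis.
    dt: time between samples (s).
    Assumes a hypothetical lens where shifting the floating element by d mm
    moves the image by d mm on the sensor.
    """
    angle = 0.0
    shifts = []
    for rate in gyro_rates:
        angle += rate * dt  # integrate angular rate into a tilt angle
        image_shift = focal_length_mm * math.tan(angle)  # how far the image drifted
        shifts.append(-image_shift)  # move the element the opposite way
    return shifts
```

A real controller would also need to separate deliberate pans from shake and respect the element's limited travel. Both are left out here to keep the core idea visible.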